A neural processing unit (NPU), also known as a neural processor, is a microprocessor specialized in accelerating machine learning algorithms, typically by executing predictive models.

Some of the applications of Machine Learning include:

Our customer has developed a low-power NPU for image recognition.

The chip is fully programmable because it is currently deployed on an FPGA, but this implementation shows limitations in terms of autonomy (battery life), execution speed, and the size of the neural network it can run.

For use cases where the algorithm never changes, an FPGA may not be the best approach. Its high power consumption and large chip size can make an ASIC the better deployment target.

That’s why the customer contacted our design team to assess converting their FPGA design to an ASIC.

During the feasibility study, IC’Alps presented the technical, economic, and time-related elements the customer needed to assess the viability of converting this FPGA design to a 55 nm ASIC.

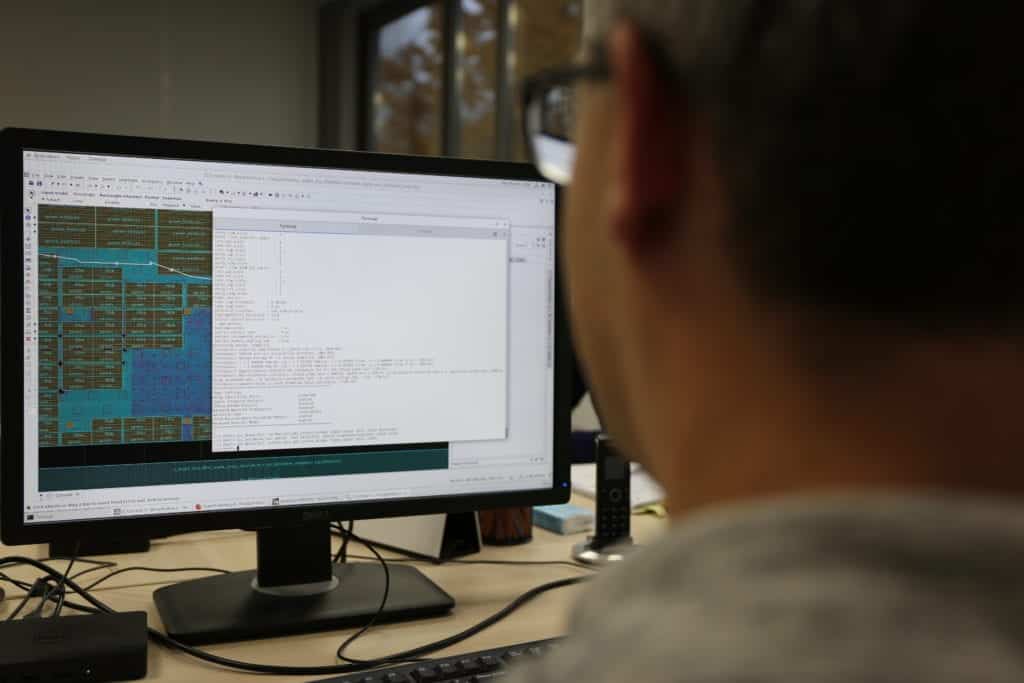

First, starting from the RTL code provided by the customer, the IC’Alps team produced an initial estimate of the NPU's performance on the ASIC target, based on post-synthesis results.

Our physical implementation experts then recommended modifications to this first iteration of the RTL code to ease the migration to an ASIC while optimizing performance.

As part of this project, the supply chain team also assessed the costs and timeline for the development, industrialization, and production of the custom ASIC in 55 nm technology.

By implementing the suggested RTL modifications, we halved the area of the neural processor and reduced its power consumption by a factor of 8 compared with the initial netlist, while reaching a clock frequency of 200 MHz in the ASIC implementation versus 100 MHz on FPGA.
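To make these ratios concrete, here is a minimal sketch that normalizes the initial netlist's area and power to 1.0. Note that the area and power gains are measured against the initial ASIC netlist, while the frequency gain is measured against the FPGA. The cycle count per inference (`CYCLES_PER_INFERENCE`) is a hypothetical value, and the sketch assumes it is identical on both targets. Under that assumption, doubling the clock halves the latency per inference. None of these values are customer data.

```python
# Normalized baseline: initial netlist area and power = 1.0 (arbitrary units, assumption).
BASELINE_AREA = 1.0
BASELINE_POWER = 1.0

AREA_REDUCTION = 2   # area divided by 2 vs. the initial netlist (from the case study)
POWER_REDUCTION = 8  # consumption divided by 8 vs. the initial netlist (from the case study)

F_FPGA_MHZ = 100.0   # clock frequency on FPGA (from the case study)
F_ASIC_MHZ = 200.0   # clock frequency on ASIC (from the case study)

# Hypothetical workload size, assumed identical on FPGA and ASIC.
CYCLES_PER_INFERENCE = 1_000_000


def latency_us(cycles: int, f_mhz: float) -> float:
    """Latency in microseconds for `cycles` clock cycles at `f_mhz` MHz."""
    return cycles / f_mhz


optimized_area = BASELINE_AREA / AREA_REDUCTION
optimized_power = BASELINE_POWER / POWER_REDUCTION

# Speedup of ASIC over FPGA, valid only if the cycle count is unchanged.
speedup = latency_us(CYCLES_PER_INFERENCE, F_FPGA_MHZ) / latency_us(
    CYCLES_PER_INFERENCE, F_ASIC_MHZ
)

print(f"area: {optimized_area:.3f}, power: {optimized_power:.3f}, speedup: {speedup:.1f}x")
```

Running it prints `area: 0.500, power: 0.125, speedup: 2.0x`. If the RTL changes also altered the number of cycles per inference, the latency gain would differ from the raw frequency ratio.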